|

SOMAPHONY

|

|

SOMAPHONY is an audiovisual composition that can be played as an instrument and is embodied in a physical object representing the interface to the composition itself. The interface is built with our Instrumentarium for audiovisual performance, SOMAPHONE, which integrates generative music and visualization in one combined audiovisual instrument. We use movement and gestures as a natural and expressive way of interacting with reactive musical and visual content. An important aspect of our work on SOMAPHONY is the embodiment of a non-linear composition in a hardware/software object, so that the performer can focus on articulation, intonation and remix, resulting in a musical and visual re-composition. |

The project focuses on the interdependence between a digital instrument and a performer, and on how the performer can influence the behaviour of the instrument with his/her biofeedback signals. For example, instead of artificially applying a pre-recorded groove, we use slight changes in the heart-rate, or fluctuations in the tension level of the body, to influence the computer metronome. Generally, we want to avoid any algorithmic randomization and substitute it with data acquired from movement and bio-signals. Such a strategy results in more organic-like digital behaviours, which we explore within our project SOMAPHONY. |
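The idea of letting the heart-rate, rather than a random generator, shape the metronome can be illustrated with a minimal Python sketch. It is not taken from the SOMAPHONY software: the function name, the `depth` parameter and the use of intervals between successive heartbeats as input are all assumptions made for illustration.

```python
from typing import Iterable, List


def beat_intervals_from_heart(
    rr_intervals_ms: Iterable[float],
    base_bpm: float = 120.0,
    depth: float = 0.05,
) -> List[float]:
    """Return metronome beat lengths in seconds, nudged by heart-rate changes.

    rr_intervals_ms: times between successive heartbeats, in milliseconds.
    base_bpm:        nominal tempo of the metronome (hypothetical default).
    depth:           how strongly the heartbeat may bend the tempo
                     (0.05 means at most about 5 percent per beat).
    """
    rr = list(rr_intervals_ms)
    if not rr:
        return []
    mean_rr = sum(rr) / len(rr)
    base_beat = 60.0 / base_bpm
    beats = []
    for interval in rr:
        # Relative deviation of this heartbeat from the performer's average.
        deviation = (interval - mean_rr) / mean_rr
        # Clamp so that an outlier (e.g. a missed beat) cannot derail the tempo.
        deviation = max(-1.0, min(1.0, deviation))
        # A longer heartbeat interval stretches the beat, a shorter one tightens it.
        beats.append(base_beat * (1.0 + depth * deviation))
    return beats
```

With a perfectly steady pulse every beat keeps its nominal length; small natural variations in the pulse produce correspondingly small timing deviations, which take the place of an artificially applied groove or a random jitter.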

With such an approach we want to explore the notion of an instrument that behaves differently from the linear, cause-and-effect response to action that we have come to expect from traditional instruments.

Watch Somaphony video demo

|

While developing SOMAPHONY we designed and

prototyped a performative audio-visual toolkit - our

Instrumentarium for audiovisual performance. It includes

hardware and software components that allow the

control, articulation and intonation of a non-linear audiovisual

composition in a natural and expressive way

through movement, gestures and biofeedback. |

To explore different strategies in audiovisual synthesis more creatively and effectively, we decided to separate the hardware and software components into modules that can be interconnected in a variety of ways, easily and in real time. We chose to implement our software mainly in two graphical programming environments: VVVV, used for signal analysis and generative visualizations, and MAX/MSP, running within Ableton Live, for real-time sound manipulation. Nevertheless, we want to keep our instrumentarium open to other sound synthesis platforms capable of reading Open Sound Control (OSC) messages, for example NI Reaktor or Pure Data. |
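To make the role of OSC as the glue between modules more concrete, here is a minimal Python sketch that encodes a single OSC 1.0 message by hand using only the standard library. It is an illustration, not the project's code: the address `/bio/hr` and the helper names are hypothetical, and only float arguments are handled.

```python
import struct


def _osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII bytes, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    padding = (-len(data)) % 4
    return data + b"\x00" * padding


def osc_message(address: str, *values: float) -> bytes:
    """Build an OSC 1.0 message that carries only float32 arguments."""
    # The type tag string starts with a comma, followed by one 'f' per float.
    type_tags = "," + "f" * len(values)
    # OSC numbers are big-endian; '>f' packs a 32-bit float in that order.
    payload = b"".join(struct.pack(">f", value) for value in values)
    return _osc_string(address) + _osc_string(type_tags) + payload


# Hypothetical example: a heart-rate reading of 72 beats per minute.
packet = osc_message("/bio/hr", 72.0)
```

The resulting bytes could be sent as a UDP datagram to whichever host and port the receiving patch listens on. Any platform that parses OSC would then be able to read the value, which is what keeps the modules interchangeable.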

BIOFEEDBACK |


Photography by Dimiter Ovtcharov & Antoni Rayzhekov

|

The Instrumentarium consists of two pieces: a portable wireless wearable device, SOMAPHONE, and the SOMAPHONE Base Station, which hosts the main audio-visual synthesis and control system. The wearable device SOMAPHONE can be attached to the body of the performer and is based on INTEL® EDISON. It is equipped with sensors that capture changes in biological processes occurring in the performer's body, such as heart-rate and body tension (measured through the galvanic skin response), and it can also sense body orientation and movement in space. |

The captured information is streamed wirelessly via Bluetooth 4.0 LE to the main device, the SOMAPHONE Base Station, for further analysis. It is used in the sonification process and to control the generative music and visualizations in real time.
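One simple way to turn a streamed bio-signal into a control value for sound or visuals is to smooth it and rescale it to the range of the target parameter. The Python sketch below shows that idea under stated assumptions: the class name, the smoothing factor and the example ranges are hypothetical and do not describe the actual SOMAPHONE signal chain.

```python
class SignalMapper:
    """Smooth a raw sensor stream and map it onto a parameter range."""

    def __init__(self, in_min, in_max, out_min, out_max, smoothing=0.2):
        if in_max == in_min:
            raise ValueError("input range must not be empty")
        self.in_min = in_min
        self.in_max = in_max
        self.out_min = out_min
        self.out_max = out_max
        self.smoothing = smoothing  # 0..1, higher reacts faster
        self._state = None          # last smoothed value

    def update(self, raw):
        """Feed one raw sample and return the mapped parameter value."""
        if self._state is None:
            self._state = float(raw)
        else:
            # Exponential moving average: move part of the way to the new sample.
            self._state += self.smoothing * (raw - self._state)
        # Normalise to 0..1 and clamp, so spikes cannot leave the output range.
        norm = (self._state - self.in_min) / (self.in_max - self.in_min)
        norm = max(0.0, min(1.0, norm))
        return self.out_min + norm * (self.out_max - self.out_min)


# Hypothetical use: skin response in arbitrary units 0..10 drives a
# filter cutoff between 200 Hz and 2000 Hz.
cutoff = SignalMapper(0.0, 10.0, 200.0, 2000.0, smoothing=0.5)
```

Each call to `cutoff.update(sample)` returns the next parameter value. The smoothing keeps sensor noise from producing audible jumps, while slower trends in the signal still come through.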

The second piece of the Instrumentarium - the SOMAPHONE Base Station includes an INTEL® RealSense™ 3D camera F200, tracking the motion of the hands in 3D space, a touch-screen to control the audio-visual performance and a powerful mini computer - INTEL® NUC 5i7RYH - performing the actual audiovisual synthesis. The 3D camera is used to extract information about the movement, such as phrasing and segmentation, recognition of postures and gestures. The motion tracking and the biofeedback signal analysis, as well as the visual and musical synthesis are tightly interconnected and allow a digital artist to form feedback loops between different media and the performer. |
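Segmenting movement into phrases can be approximated by watching the speed of a tracked hand and splitting the stream wherever the hand comes to rest. The following Python sketch illustrates this with plain 3D coordinates; it does not use the RealSense SDK, and the function name, the fixed sampling interval `dt` and the threshold are assumptions chosen for the example.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def segment_motion(
    positions: Sequence[Point],
    dt: float,
    speed_threshold: float,
) -> List[Tuple[int, int]]:
    """Return (start, end) frame-index pairs of spans where the hand is moving.

    positions:       one 3D hand position per frame.
    dt:              time between frames in seconds (assumed constant).
    speed_threshold: speed below which the hand counts as resting.
    """
    segments = []
    start = None
    for i in range(1, len(positions)):
        # Speed between two consecutive frames (distance over time).
        speed = math.dist(positions[i - 1], positions[i]) / dt
        if speed >= speed_threshold:
            if start is None:
                start = i - 1  # movement begins at the previous frame
        elif start is not None:
            segments.append((start, i - 1))  # movement ended one frame ago
            start = None
    if start is not None:
        # The hand was still moving when the recording stopped.
        segments.append((start, len(positions) - 1))
    return segments
```

Each returned pair marks one candidate phrase. A real system would likely smooth the speed signal first and add posture and gesture recognition on top.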

RESOURCES

Somaphone Wearable - Enclosure 3D Model (pdf, stl)

version 2.0 by Nikola Malinov, Dimiter Ovtcharov. Round housing for INTEL® EDISON using the SparkFun blocks: Battery, 9 Degrees of Freedom and ADC.

Somaphone Wearable - Firmware (C++)

version 0.1alpha by Antoni Rayzhekov a.k.a. Zeos. Reads the sensor input, processes it and sends the information as OSC bundles.

Somaphone Wearable - Hardware Diagram (pdf)

version 1.0 by Antoni Rayzhekov. Hardware components and sensors.

Somaphone Base Station - 3D Model extension lid (stl)

version 1.0 by Nikola Malinov. Housing for INTEL® NUC (5i7RYH) including a 5" touch-screen and INTEL® RealSense™ F200 3D Camera.

Somaphone Base Station - Top Plate (pdf)

version 1.0 by Dimiter Ovtcharov, Antoni Rayzhekov. Somaphone top plate holding a 5" touch-screen and INTEL® RealSense™ F200 3D Camera.

VVVV.Packs.RSSDK (C#, VVVV)

version 0.2alpha by Antoni Rayzhekov a.k.a. Zeos; microdee (Uberact). Access to the INTEL® RealSense™ API from VVVV.

LiveTrack (C#, VVVV)

OSC message parser for VVVV.

OSCMaster/OSCMapper/OSCTrackControl (MAX/MSP)

version 0.1alpha by Antoni Rayzhekov a.k.a. Zeos. OSC Max4Live devices for Ableton Live.

NOTE: We are continuously working to release more resources, design files and code in Q2 2016.

|

|

The project SOMAPHONY stems from a series of studies on musical biofeedback and movement, undertaken during the creation of the project 10VE - participatory musical biofeedback and movement composition for two actuators and an audience (2014/2015) by Antoni Rayzhekov and Katharina Köller. As part of that project's development, several experiments were conducted based on sensory input, detecting body tension, breath and heart-rate. The result was a special piece of hardware and software, designed and created to enable the performers to control the generative process of music and visualizations through their biofeedback signals and movements in real time. |

At the beginning of 2015 Intel contacted the artists Antoni Rayzhekov and Katharina Köller and commissioned an audiovisual performance using the micro computer INTEL® EDISON. In mid 2015, the concept for the SOMAPHONY audiovisual composition was created. For the purpose of the project, a state-of-the-art hardware/software instrumentarium was created, based on the experience gained from the project 10VE. During the project development, several additional hardware components were integrated into the interactive audio-visual system, such as the INTEL® RealSense™ 3D Camera and the compact INTEL® NUC computer kit. |

The SOMAPHONE Instrumentarium is developed in cooperation with INTEL® as a hardware provider and commissioner of the audiovisual performance SOMAPHONY. The project and its base components, such as the developed hardware, software and enclosures, are published as open source and aim to encourage, inspire and help other digital artists and peers to build upon them and develop their own audiovisual interfaces based on movement and/or biofeedback. |

June/July 2015 | Logical scheme of the SOMAPHONE Wearable

|

September/October 2015 | First prototype of the SOMAPHONE Wearable used in the SOMAPHONY Demo 2015

|

November/December 2015 | Second prototype of the SOMAPHONE Wearable, before soldering and finishing

|

November/December 2015 | Second prototype of the SOMAPHONE Wearable

|

||||

July 2015 | Test setup for the SOMAPHONY DEMO

|

October 2015 | First prototype of the SOMAPHONE Base Station used in the SOMAPHONY Demo 2015. We mapped a part of the projection onto the top of the NUC to give visual feedback to the performer. Later we will construct a special housing to hold the camera and add a built-in touch-screen.

|

November 2015 | 3D Model of the second prototype of the SOMAPHONE Base Station.

|

December 2015 - January 2016 | Second prototype of the SOMAPHONE Base Station. Standalone device with built-in INTEL® RealSense™ 3D Camera and a touch-screen. |

||||

July - September 2015 | INTEL® RealSense™ API for VVVV

|

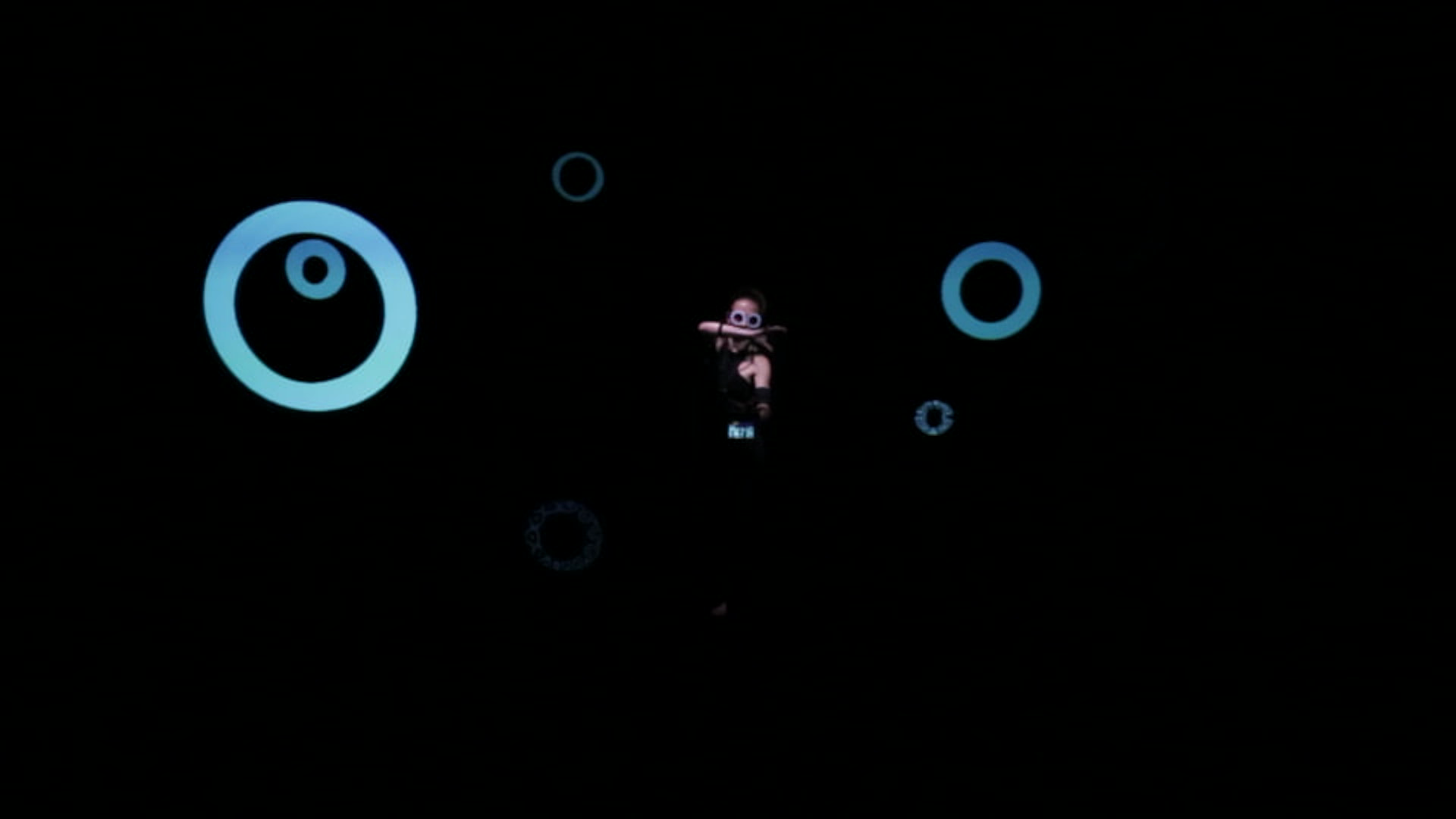

October 2015 | Still from SOMAPHONY demo video

|

February 2016 | Still from Introducing SOMAPHONE video

|

February 2016 | SOMAPHONE Base Station User Interface

|

Antoni Rayzhekov: concept, software, hardware, sound, visuals, videography

Katharina Köller: performer, communication, costume, sensor electrodes

Dimiter Ovtcharov: Somaphone toolkit assembly, camera, photography, assistance

Nikola Malinov: Somaphone toolkit 3D modeling |

Special Thanks: Intel Division, Vienna

|

Contact: ping {at} somaphonics {dot} com |

|

(cc) Some Rights Reserved! 2015-2016 SOMAPHONICS |

|

All trademarks and registered trademarks |